Best Crawlers and Indexing Settings for Your Blogger Website in 2023

So you've set up your blog and you're ready to start publishing content. Congratulations! For readers to find that content, search engines first have to crawl it and then index it correctly.

In this guide, we'll walk you through the best settings for crawlers and indexing in 2023. Don't worry, even if you're not publishing content yet, these settings will help prepare your blog for the future. Let's get started!

What Is a Search Engine Crawler?

A crawler is a piece of software that "crawls" the internet, downloading websites and indexing them for search engines. This allows people to find your website when they search for related terms.

Many crawlers exist, but the two best known are Googlebot (Google) and Bingbot (Bing). You can configure your website to tell these crawlers which pages to crawl and index and which to leave alone.
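To make the idea of crawling concrete, here is a minimal sketch of the link-discovery step a crawler performs on each page. It uses only Python's standard library and works on a hard-coded sample page; the blog address, the sample HTML, and the names `LinkCollector` and `extract_links` are assumptions made for illustration, not part of any real crawler.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collects absolute URLs from <a href="..."> tags on one page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links against the page's own URL.
                self.links.append(urljoin(self.base_url, value))


def extract_links(html, base_url):
    """Return every link found in the given HTML, in document order."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    return collector.links


# Hypothetical page content; a real crawler would download this over HTTP.
SAMPLE_PAGE = """
<html><body>
  <a href="/2023/01/first-post.html">First post</a>
  <a href="https://example.blogspot.com/p/about.html">About</a>
</body></html>
"""

found_links = extract_links(SAMPLE_PAGE, "https://example.blogspot.com/")
```

A real crawler repeats this step for every link it finds, which is why pages that nothing links to are hard for it to discover.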

What Are the Indexing Settings?

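Indexing settings determine how search engines, and through them your readers, find your blog posts. Blogger has no switch that indexes a post on demand: once a post is published and crawlers are allowed to reach it, the search engine decides when to add it to its index.

In practice, a new post gets indexed in one of two ways:

- Automatic discovery: Crawlers find the post on their own through your sitemap, your feed, or links from other pages.

- Manual request: You ask the search engine to index the post, for example with the URL Inspection tool in Google Search Console.

There are pros and cons to both. Automatic discovery takes no effort, but it can take anywhere from hours to weeks. A manual request can speed things up for an important post, but you have to repeat it for each URL and it is not a guarantee.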

Now that you know the basics of crawlers and indexing, it's time to set things up on your blog. Here are some best practices to follow.

Use a sitemap: A sitemap is a file that lists the pages on your website so crawlers know where to find them. This helps search engines discover your whole site, not just the pages that other pages link to. Blogger generates a sitemap for you automatically at /sitemap.xml, so you don't need a separate generator; you only need to submit that URL in Google Search Console.

Restrict access to sensitive content: If you have pages that you don't want crawlers to visit, you can use the robots exclusion protocol to tell them to stay away. This is done by adding a "Disallow" rule to your robots.txt file. Keep in mind that a Disallow rule stops crawling, not indexing: a blocked URL can still appear in results if other sites link to it. To keep a page out of search results entirely, use a "noindex" robots tag instead.

Verify your website with Google: Verifying your website with Google lets you manage it in Google Search Console, where you can submit your sitemap and see how your pages are crawled and indexed. You can also use Search Console to troubleshoot any indexing issues that may occur.

These are just a few of the best practices for setting up indexing on your blog. By following them, you'll ensure that your content is seen by as many people as possible!
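As a rough illustration of the sitemap and "Disallow" practices above, the sketch below checks a robots.txt file with Python's built-in `urllib.robotparser`. The robots.txt content is an assumption loosely modeled on a typical Blogger setup, and `example.blogspot.com` and `crawl_allowed` are placeholders, so adapt the rules to your own blog before relying on them.

```python
from urllib.robotparser import RobotFileParser

# Assumed robots.txt content: block search/label result pages,
# allow everything else, and point crawlers at the sitemap.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Allow: /

Sitemap: https://example.blogspot.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def crawl_allowed(url, user_agent="Googlebot"):
    """Return True if the parsed rules let this user agent fetch the URL."""
    return parser.can_fetch(user_agent, url)


post_allowed = crawl_allowed("https://example.blogspot.com/2023/01/first-post.html")
search_allowed = crawl_allowed("https://example.blogspot.com/search/label/news")
declared_sitemaps = parser.site_maps()  # available in Python 3.8+
```

Here `post_allowed` is true and `search_allowed` is false, because the only blocked path prefix is `/search`. Python's parser applies the first rule that matches, which is why the Disallow line comes before the catch-all Allow line in this sketch.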

How to Check Your Indexed Content

Now that you've got the best crawlers and indexing settings sorted out, you need to keep an eye on the content that’s actually being indexed. You can easily watch new posts, pages and other content show up in search engine results with a few simple tools.

Start by setting up Google Search Console, which is a free tool from Google that helps you understand and optimize your website's presence in search engine results. It offers detailed reports about your indexing performance, as well as insights about impressions, clicks, and position in the SERPs (search engine results pages).

You can also use third-party tools like Ahrefs to check for broken links and other crawl problems. These tools run their own crawl of your site on demand, so you don't have to wait for Google's reports to refresh. They cannot tell you what Google has actually indexed, though, so use them alongside Search Console rather than instead of it.

Finally, monitor which keywords your content appears for with tools like SEMrush or Moz Keyword Explorer. This way you can adjust your keywords and SEO strategy based on the terms that are currently driving traffic to your site.

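In Blogger's custom robots header tags settings, use the following values:

Archive and search pages

"noindex", "noodp"

Posts and pages

"all", "noodp"

Note that "noodp" only told search engines to ignore Open Directory Project descriptions. That directory closed in 2017, so the tag is harmless but no longer has any effect.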
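The header tag values above are comma-separated robots directives (written in their standard spelling, "noindex" and "noodp"). The sketch below shows one way to read such a value and decide whether it lets a page be indexed. The function names and the `PAGE_TYPE_TAGS` dictionary are hypothetical helpers for illustration, not part of Blogger.

```python
def parse_robots_directives(value):
    """Split a robots value such as "noindex, noodp" into a set of directives."""
    return {part.strip().lower() for part in value.split(",") if part.strip()}


def is_indexable(value):
    """Return False when the directives ask search engines not to index the page."""
    directives = parse_robots_directives(value)
    # "none" is shorthand for "noindex, nofollow".
    return not ({"noindex", "none"} & directives)


# The settings from this guide, keyed by hypothetical page-type names.
PAGE_TYPE_TAGS = {
    "archive_and_search": "noindex, noodp",
    "posts_and_pages": "all, noodp",
}

indexable_by_type = {
    page_type: is_indexable(value) for page_type, value in PAGE_TYPE_TAGS.items()
}
```

With these settings, archive and search pages stay out of the index while posts and pages remain indexable, which keeps thin listing pages from competing with your actual content.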

Tips on Optimizing Your Blogger Website for Crawlers & Indexers

You want to make sure your Blogger website is optimized for crawlers and indexers. Here are a few tips to help you do that:

Use a sitemap. A sitemap gives crawlers a list of your URLs, which helps them find all the content on your blog, including posts that few other pages link to.

Add structured data. Structured data, such as schema.org markup, makes it easier for search engine bots to understand what kind of content is on your site.

Make sure your website works well on mobile devices. Google now crawls and indexes the mobile version of a page first (mobile-first indexing), so a theme that breaks on phones can hurt how your content is indexed.

Have unique and descriptive titles and meta descriptions for each page on your blog, as this helps crawlers understand what kind of content they can expect to find on the page.

Finally, make sure you update your content regularly to improve its chances of being found by bots and indexed correctly by search engines.
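As an example of the structured data tip above, here is a minimal sketch that builds a schema.org `BlogPosting` snippet in JSON-LD form. The function name, the chosen fields, and the sample values are assumptions; check the search engine's own structured data guidelines for the properties it actually uses.

```python
import json


def blog_posting_json_ld(headline, description, url, date_published):
    """Return a <script> tag holding schema.org BlogPosting data as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "BlogPosting",
        "headline": headline,
        "description": description,
        "url": url,
        "datePublished": date_published,
    }
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


# Hypothetical post details used only to show the output shape.
snippet = blog_posting_json_ld(
    headline="Best Crawlers and Indexing Settings for Blogger",
    description="How to configure crawling and indexing on a Blogger site.",
    url="https://example.blogspot.com/2023/01/crawler-settings.html",
    date_published="2023-01-15",
)
```

The resulting snippet would go in the page's HTML. Many Blogger themes already emit some structured data, so inspect your theme before adding more.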

Troubleshooting Common Crawler and Indexing Issues in Blogger

Having crawling or indexing issues with your Blogger site? Here are some tips that can help you find a solution.

If you've made changes to your content or the design of your blog, it's possible that crawlers are having difficulty processing them. Start by crawling the affected pages with a site crawler like Screaming Frog and look for errors. If it finds none, use the URL Inspection tool in Google Search Console to see how Google views the page, and resubmit your sitemap.xml if your site's URLs have changed.

You can also check for any broken links or missing images that might hinder crawling, as well as look for any duplicate content that might be causing issues. Doing this will help ensure that the search engine bots are able to crawl through your content without any interruptions.
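One simple way to look for the duplicate content mentioned above is to hash the text of each page and group pages whose text is identical once case and spacing are ignored. This is only a sketch: the `pages` data is made up, `normalize` and `find_duplicates` are hypothetical helpers, and exact-match hashing will not catch pages that are merely similar.

```python
import hashlib
from collections import defaultdict


def normalize(text):
    """Lowercase the text and collapse runs of whitespace to single spaces."""
    return " ".join(text.lower().split())


def find_duplicates(pages):
    """Group URLs whose normalized text is identical.

    `pages` maps each URL to the text of that page.
    """
    groups = defaultdict(list)
    for url, text in pages.items():
        digest = hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()
        groups[digest].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Made-up page texts; in practice these would come from a crawl of your blog.
pages = {
    "/2023/01/first-post.html": "Welcome to my blog!",
    "/2023/01/first-post.html?m=1": "welcome   to my BLOG!",
    "/p/about.html": "About the author.",
}

duplicate_groups = find_duplicates(pages)
```

In this sample the desktop and mobile URLs of the first post end up in the same group, while the About page stands alone.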

Finally, remember that Google regularly updates its crawling and ranking systems, so keep an eye on its announcements. And if all else fails, don't hesitate to ask for help in the Google Search Central Help Community.

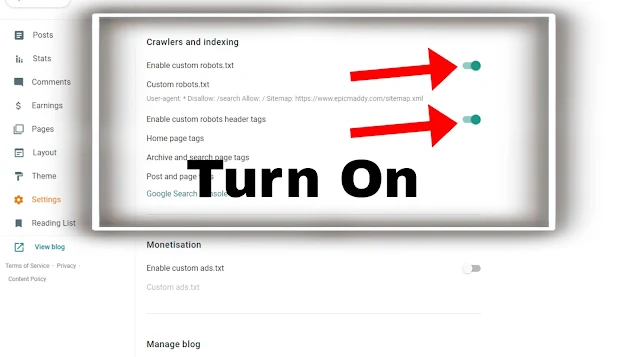

Having a well-optimized website can help ensure that your content is easily discoverable by search engines and users alike. One of the key factors in this optimization is the proper configuration of your website crawlers and indexing settings. Here, we'll go over the best crawlers and indexing settings for a Blogger website to help you get the most out of your online presence.

Use Search Engine Visibility Settings

The first step to optimizing your website's crawler and indexing settings is to make sure that search engines can find and crawl your website. To do this, go to your Blogger dashboard and select "Settings", then find the "Privacy" section. Make sure the "Visible to search engines" option is turned on. In the older Blogger interface, the same option appears as "Let search engines find your blog".

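Set Up a Sitemap

A sitemap is a file that lists all of the pages on your website and helps search engines understand the structure of your site. Blogger creates this file for you automatically: add /sitemap.xml to the end of your blog's address to see it. To make sure Google uses it, open Google Search Console, go to "Sitemaps", enter sitemap.xml, and select "Submit". You can also reference the sitemap from a custom robots.txt, which you enable under "Settings" in the "Crawlers and indexing" section.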
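If you want to see what a sitemap actually contains, the sketch below reads the page URLs out of a sitemap in the standard sitemaps.org XML format. The sample XML and the `sitemap_urls` helper are assumptions for illustration; on a live blog you would download the real sitemap file first, and a large blog may serve a sitemap index that points to several sitemap files instead.

```python
import xml.etree.ElementTree as ET

# Namespace used by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap content with two entries.
SAMPLE_SITEMAP = """
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>https://example.blogspot.com/2023/01/first-post.html</loc>
    <lastmod>2023-01-15T10:00:00Z</lastmod>
  </url>
  <url>
    <loc>https://example.blogspot.com/2023/02/second-post.html</loc>
    <lastmod>2023-02-01T08:30:00Z</lastmod>
  </url>
</urlset>
"""


def sitemap_urls(xml_text):
    """Return the <loc> value of every <url> entry in a sitemap."""
    root = ET.fromstring(xml_text.strip())
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)]


listed_urls = sitemap_urls(SAMPLE_SITEMAP)
```

Comparing this list with the pages a search engine reports as indexed is a quick way to spot posts that have been missed.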

Use Meta Descriptions

Meta descriptions provide a brief summary of what a page is about. They are not a direct ranking factor, but search engines often show them as the snippet under your title, so a good one can earn you more clicks. To add one in Blogger, first go to "Settings" and turn on "Enable search description" in the "Meta tags" section. Then go to "Posts", select the post you want to edit, open "Search Description" in the post settings panel, and add a brief description of the post.
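Before pasting a description into a post, it can help to check it automatically. The sketch below flags descriptions that are empty or too long. The helper name and the 150-character default are assumptions, based on the limit commonly cited for Blogger's search description field, so adjust the number to whatever limit you actually observe.

```python
def check_search_description(text, max_chars=150):
    """Return a list of problems found in a search description.

    max_chars is an assumed limit, not an official figure.
    """
    problems = []
    cleaned = " ".join(text.split())  # collapse line breaks and extra spaces
    if not cleaned:
        problems.append("description is empty")
    elif len(cleaned) > max_chars:
        over = len(cleaned) - max_chars
        problems.append(f"description is {over} characters too long")
    return problems


good = check_search_description("How to configure crawling and indexing on Blogger.")
empty = check_search_description("   ")
too_long = check_search_description("x" * 160)
```

An empty list means the description passed both checks.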

Utilize Header Tags

Header tags (H1, H2, H3, etc.) provide structure to your content and help search engines understand the hierarchy of information on a page. To use header tags on your Blogger website, go to your dashboard and select "Posts", then select the post you want to edit. In the editor, either pick a heading style from the paragraph style menu or switch to "HTML view" and add the header tags to your content by hand. Use headings in order, without skipping levels.
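To check that a post's headings form a sensible hierarchy, you can extract them from the post's HTML and look for skipped levels. The following sketch does this with Python's standard `html.parser`; the class and function names and the sample HTML are assumptions made for illustration.

```python
from html.parser import HTMLParser


class HeadingOutline(HTMLParser):
    """Records each h1-h6 heading as a (level, text) pair."""

    def __init__(self):
        super().__init__()
        self.headings = []
        self._level = None  # level of the heading currently being read
        self._text = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self._level = int(tag[1])
            self._text = []

    def handle_data(self, data):
        if self._level is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if self._level is not None and tag == f"h{self._level}":
            self.headings.append((self._level, "".join(self._text).strip()))
            self._level = None


def skipped_levels(headings):
    """Return the text of headings that jump more than one level deeper."""
    problems = []
    previous = None
    for level, text in headings:
        if previous is not None and level > previous + 1:
            problems.append(text)
        previous = level
    return problems


# Hypothetical post body: the h4 follows an h2 directly, skipping h3.
SAMPLE_POST = "<h2>Intro</h2><p>Text</p><h4>Detail</h4><h3>Next steps</h3>"

outline = HeadingOutline()
outline.feed(SAMPLE_POST)
flagged = skipped_levels(outline.headings)
```

In the sample post, only "Detail" is flagged, because it jumps from level 2 straight to level 4.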

Monitor Your Site's Performance

Finally, it's important to monitor your website's performance to ensure that it's being properly crawled and indexed by search engines. You can use tools like Google Search Console to see how many pages on your website have been indexed, which pages are getting the most traffic, and what keywords people are using to find your site.
